
Introduction

We’ve got a usability test day coming up and we’ve planned on five participants. That’s the magic number to find most of the important problems, right?

During design, UX teams do formative testing – focusing on qualitative data and learning enough to move the design forward. But how many participants are needed to have confidence in the test results? That debate’s been going for a quarter-century.

Your design team can learn a lot from just a few usability participants, but only if you are prepared to act on those results, redesign, and retest. Also, not all critical problems are found easily with just a few people – so if you’re working on a complex system or one where lives are at stake, you’ll want to be more thorough.

Moreover, there’s a statistical approach to estimating whether a few participants are enough, or whether it takes more of them to discover usability problems. Jeff Sauro and James Lewis (2012) discuss usability problem discoverability, do sample size calculations, and helpfully summarize them in table form. We’ll share those here.

Finally, we’ll review factors to consider in assessing whether your design has problems that are easier or harder to discover.

The magic number 5?

Five users can be enough – if problems are somewhat easy to discover, and if you don’t expect to find all problems with just one test.

Problem discoverability (here, p) is the probability that a single participant will encounter a given problem during usability testing. Nielsen and Landauer (1993; see also Nielsen, 2012) found an average of p=0.31 across the projects they studied. On that basis, 5 users would be expected to find about 85% of the usability problems available for discovery in that test iteration.
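The 85% figure comes from a simple binomial model: if each participant independently has probability p of hitting a problem, the chance that at least one of n participants hits it is 1 - (1 - p)^n. When every problem shares the same p, that is also the expected share of problems found. A minimal Python sketch under those simplifying assumptions (real problems vary in p); `prob_detected` is our own illustrative name:

```python
def prob_detected(p: float, n: int) -> float:
    """Chance that a problem with per-participant occurrence probability p
    is seen by at least one of n participants, assuming participants
    encounter it independently of one another."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Nielsen and Landauer's average discoverability with 5 participants.
print(f"{prob_detected(0.31, 5):.1%}")
```

This prints 84.4%, which rounds to the "about 85%" usually quoted.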

Similarly, Virzi (1992) created a model based on other usability projects, finding p between 0.32 and 0.42. Therefore, 80% of the usability problems in a test could be detected with 4 or 5 participants.
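Plugging Virzi's range into the binomial model P(x≥1) = 1 - (1 - p)^n shows where "4 or 5" comes from (values rounded, assuming independent participants and one p for all problems):

$$
\begin{aligned}
n = 4:&\quad 1-(1-0.32)^4 \approx 0.79, \qquad 1-(1-0.42)^4 \approx 0.89\\
n = 5:&\quad 1-(1-0.32)^5 \approx 0.85, \qquad 1-(1-0.42)^5 \approx 0.93
\end{aligned}
$$

At the low end of the range, four participants fall just short of 80%, which is why five is the safer reading.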

And so a UX guideline was born. For the best return on investment, test with 5 users, find the majority of problems, fix them, and retest. For wider coverage, vary the types of users and the tasks tested in subsequent iterations.

Problem discoverability and sample size

But the “5-user assumption” doesn’t hold up consistently. Faulkner (2003), using yet another benchmark task, found that although tests with 5 users revealed an average of 85% of usability problems, individual 5-user samples ranged from nearly 100% down to only 55%. Groups of 10 did much better, finding 95% of the problems on average, with a minimum of 82%.

Perfetti and Landesman (2001) tested an online music site. After 5 users, they had found only 35% of all usability issues. After 18 users, they were still discovering serious issues and had uncovered fewer than half of the 600 estimated problems. Spool and Schroeder (2001) also reported a large-scale website evaluation in which 5 participants came nowhere near discovering 85% of the problems.

Why the discrepancy? Blame low problem discoverability, p.

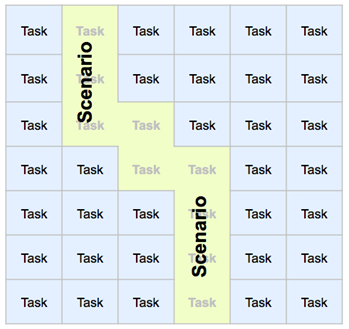

First, large and complex systems can’t be covered completely during a 1-hour usability test. We often just have users going through a few important scenarios, out of dozens, encountering a handful of tasks in each scenario.

Second, even once we’ve focused on important scenarios, some are relatively unstructured. That lack of structure contributes to low p. When participants have more variety of paths to an end goal, they have a greater number of problems available for discovery along the way, so any given problem is less likely to be found.

Using problem discoverability to plan sample size

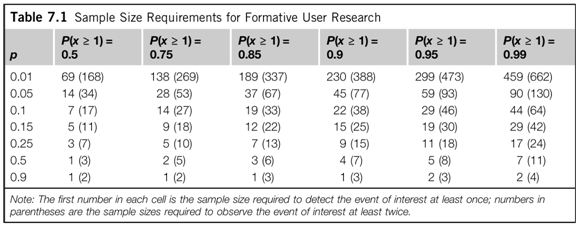

Sauro and Lewis (2012) provide tables to help researchers plan sample size with problem discoverability in mind. Table 7.1 (p. 146) shows sample size requirements as a function of problem occurrence probability, p, and the likelihood of detecting the problem at least once, P(x≥1).
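A table like this follows from inverting the binomial detection formula. Assuming a fixed per-participant probability p and independent participants, solve for n and round up to get the smallest sample that meets the detection target:

$$
P(x \ge 1) = 1 - (1-p)^n
\quad\Longrightarrow\quad
n = \left\lceil \frac{\ln\bigl(1 - P(x \ge 1)\bigr)}{\ln(1 - p)} \right\rceil
$$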

For example, if you were interested in slightly harder-to-find problems (p=0.15) and wanted to be 85% sure of finding them, you’d need to have 12 participants in the test. Notice how quickly sample sizes grow for the most difficult-to-find problems and the greatest certainty of finding them!
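A short Python sketch that rebuilds a grid shaped like Table 7.1 from n = ⌈ln(1 - P(x≥1)) / ln(1 - p)⌉. It assumes the binomial model (fixed p, independent participants); the rows and columns are our own selection and may not match the book's exactly, and `required_n` is a hypothetical helper name.

```python
import math


def required_n(p: float, target: float) -> int:
    """Smallest n such that 1 - (1 - p)**n >= target.

    Assumes a fixed per-participant occurrence probability p and
    independent participants (the binomial model).
    """
    if not (0.0 < p < 1.0 and 0.0 < target < 1.0):
        raise ValueError("p and target must be strictly between 0 and 1")
    exact = math.log(1.0 - target) / math.log(1.0 - p)
    # Subtract a tiny tolerance so a ratio that should be a whole number
    # (e.g. p=0.5, target=0.75 gives exactly 2) is not pushed up by rounding.
    return math.ceil(exact - 1e-9)


# Rows: problem occurrence probability p; columns: detection target P(x>=1).
targets = (0.50, 0.75, 0.85, 0.90, 0.95, 0.99)
print("p     " + "".join(f"{t:>6.0%}" for t in targets))
for p in (0.50, 0.25, 0.15, 0.10, 0.05, 0.01):
    row = "".join(f"{required_n(p, t):>6d}" for t in targets)
    print(f"{p:<6.2f}{row}")
```

The p=0.15 row at 85% gives 12, matching the example above, and the bottom-right cells show how steeply requirements climb for rare problems.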

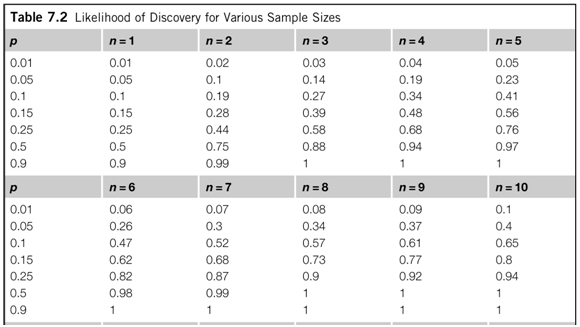

Another way to look at the tradeoffs is with Table 7.2 (p. 147, excerpted here). For a given sample size, how well can you do at detecting different kinds of problems? With 10 participants, you can expect to find nearly all of the moderately frequent problems (p=0.25, 94%), fewer than half of rarer problems (p=0.05, 40%), and so on.
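Both figures follow from the binomial model P(x≥1) = 1 - (1 - p)^n with n fixed at 10, assuming independent participants:

$$
\begin{aligned}
p = 0.25:&\quad 1 - 0.75^{10} \approx 1 - 0.056 = 0.94\\
p = 0.05:&\quad 1 - 0.95^{10} \approx 1 - 0.599 = 0.40
\end{aligned}
$$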

Note that if you expect your problems in general to be harder to find – as for Perfetti and Landesman – you’ll want more participants in your studies.

But how discoverable are your design’s problems?

Sauro and Lewis (p. 153) give a method for estimating p from your own early sessions: test with two participants, note the number of problems found and how much the participants overlap, adjust the estimated sample size, then repeat after four participants.
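The starting point for such an estimate is a problem-by-participant matrix: the naive p is the share of cells in which a problem was observed. Below is a minimal Python sketch of that naive step only, using made-up data for two participants; `estimate_p` and `required_n` are hypothetical helper names, and the sample-size step assumes the binomial model. We do not reproduce the small-sample adjustment Sauro and Lewis discuss.

```python
import math

# Hypothetical results after two participants: one row per problem observed,
# one column per participant; 1 means that participant hit the problem.
observed = [
    [1, 1],  # problem A: seen by both participants
    [1, 0],  # problem B: seen by participant 1 only
    [0, 1],  # problem C: seen by participant 2 only
    [1, 0],  # problem D: seen by participant 1 only
]


def estimate_p(matrix: list[list[int]]) -> float:
    """Naive discoverability estimate: the share of problem-by-participant
    cells in which the problem was observed."""
    cells = sum(len(row) for row in matrix)
    if cells == 0:
        raise ValueError("need at least one observed problem")
    return sum(sum(row) for row in matrix) / cells


def required_n(p: float, target: float) -> int:
    """Smallest n with 1 - (1 - p)**n >= target under the binomial model."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p) - 1e-9)


p_hat = estimate_p(observed)
print(f"estimated p = {p_hat:.3f}")
print(f"participants for 85% detection: {required_n(p_hat, 0.85)}")
```

Here the naive estimate is 0.625, which implies only 2 participants. That is the trap: with two participants every observed row contains at least one 1, so the naive estimate can never fall below 0.5, and problems nobody hit are missing from the matrix entirely. This is why the estimate needs adjusting and re-checking after four participants.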

But pragmatically? On a design project we’re typically using “t-shirt sizing” for test sample sizes: small (~5), medium (~10), or large (~25). For formative testing, where we want to learn, iterate, and keep the design moving forward, that typically means choosing between a small and medium sample size for any given test.
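One way to read the t-shirt sizes is to ask which problems each size would catch with reasonable confidence. Solving 1 - (1 - p)^n = target for p gives the least discoverable problem a sample of n can be expected to reveal. A minimal Python sketch, assuming the binomial model and an 85% detection target (our choice, matching the earlier example); `smallest_detectable_p` is a hypothetical helper name:

```python
def smallest_detectable_p(n: int, target: float = 0.85) -> float:
    """Smallest per-participant occurrence probability p for which n
    participants give at least `target` chance of seeing the problem
    at least once: solves 1 - (1 - p)**n = target for p."""
    if n < 1 or not 0.0 < target < 1.0:
        raise ValueError("need n >= 1 and 0 < target < 1")
    return 1.0 - (1.0 - target) ** (1.0 / n)


for label, n in (("small", 5), ("medium", 10), ("large", 25)):
    print(f"{label:<7}(n={n:>2}): p >= {smallest_detectable_p(n):.2f}")
```

At that target, a small test reliably catches only problems that roughly a third of participants would hit (p ≈ 0.32), a medium test reaches p ≈ 0.17, and a large test reaches p ≈ 0.07.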

What factors increase discoverability and make small sample size work? Sauro and Lewis list several, and our experience suggests others:

- Expert test observers.

- Multiple test observers.

- New products with newly designed interfaces, rather than more mature and refined designs.

- Less-skilled participants; more knowledgeable participants can work around known problems.

- A homogeneous (but still representative) set of participants.

- Structured tasks, where there’s a path along which problems are discovered.

- Coverage. For a larger design, include a wide-ranging set of tasks, both simple and complex.

- Alternatively, focus a small test on just one design aspect, iterate quickly, and move to the next issue (see Schrag, 2006).

Small tests: Doing them RITE

Formative testing is worthless if the design doesn’t change. Whether the method is called discount, Lean, or Agile, small tests are intended to deliver design guidance in a timely way throughout development.

But do decision-makers agree that the problems found are real? Are resources allocated to fix the problems? Can teams be sure that the proposed solutions will work? If not, it doesn’t matter how cheaply you’ve done the test; it has zero return on investment.

Medlock and colleagues at Microsoft (2005) addressed process issues head-on with their Rapid Iterative Testing and Evaluation (RITE) method. Read the full article for their clear description of the depth of involvement and speed of response required from the development organization. Rapid small-n iterative testing is a full-contact team sport.

Focusing on testing methodology itself, Medlock et al. listed specific requirements for RITE, including:

- Firm agreement on tasks all participants must be able to perform.

- Ability of the usability engineer to assess whether a problem observed once is “reasonably likely to be a problem for other people,” which requires domain and problem experience.

- Quick prioritization of issues according to obviousness of cause and solution. Don’t rush to make all changes quickly; poorly solved issues can “break” other parts of the user experience.

- Incorporating fixes as soon as possible, even if after just one participant.

- Commitment to run follow-up tests with as many participants as needed to confirm the fixes.

Found a problem! So is it real?

One of our five participants had a problem. Should we fix it? Or is it an idiosyncrasy, a glitch, a false positive?

Usability expertise plays an important role in weighing evidence and assessing the root cause of the problem. Sauro and Lewis note the benefits of expert (and multiple) observers. The RITE method relies on a usability specialist who is also expert in the domain.

The more subtle the problem, the more the team needs someone who can persuasively say, “Based on what I know of human performance, and of users’ behavior with similar interfaces, this really is (or isn’t) an issue.”

Of course, given small and relatively inexpensive tests, the team can fix obvious problems and then collect more data on edge cases in the next iteration.

Medium and large tests: Risk management

Small iterative usability tests reduce certain kinds of risks: timeliness of feedback, impact of feedback, and time to market. In particular, small tests early in the design process can help the team converge more quickly on usable designs.

But when lives are at stake, there are other risks to consider. Tests must be designed to find uncommon but critical usability problems. In Sauro and Lewis’ analysis, this means consulting the columns of the sample size table where the required likelihood of detecting a problem at least once, P(x≥1), is high, and where sample sizes are correspondingly larger.
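To see how fast the numbers climb, take a rare problem (p = 0.05) that the team wants a 99% chance of seeing at least once. Under the binomial model, assuming independent participants:

$$
n = \left\lceil \frac{\ln(1 - 0.99)}{\ln(1 - 0.05)} \right\rceil
  = \left\lceil \frac{-4.605}{-0.0513} \right\rceil
  = \lceil 89.8 \rceil = 90
$$

By contrast, 25 participants give that same problem only about a 72% chance of detection, since 1 - 0.95^{25} ≈ 0.72.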

For example, take medical devices. At the end of product development it’s important to confirm that the device is safe and effective for human use. That is a summative or validation test, and requires about 25 participants per user group (FDA, 2011).

Even during formative testing, sample sizes closer to 10 are more common in this domain (Wiklund, 2007). We know one researcher who routinely tests 15 or 20 participants per group. This mitigates two types of risks: not only the use-safety risk to the human user, but also the business risk of missing critical problems, failing validation, and having to wait months to re-test and resubmit the product for regulatory approval!

Those of us developing unregulated systems and products might still choose larger sample sizes of 8-10. The larger number may be more persuasive to stakeholders. It may allow us to handle more variability within each user group. Or it may be a small incremental cost to the project (Nielsen, 2012).

If you work for a centralized UX group that isn’t integrated as tightly with a given development team as an iterative discount method requires – or you’re a consultant hired only as needed – you need to get the most from that usability testing opportunity. Consider using a larger sample size.

Small, medium, large: What size of test fits you?

The “magic” number 5 is not magic at all. It depends on assumptions about problem discoverability and has implications for design process and business risk. Assess these three factors to determine whether five participants is enough for you:

- Are problems hard to find? Are the participants expert, the usability engineer less familiar with the domain, or the system complex and flexible?

- Is your organization equipped to do iterative small-n tests? Are you set up for ongoing recruitment-and-test operations, committed to iteration, and able to deliver software changes rapidly?

- What are the safety and business risks of missing uncommon problems in your design?

At the very least, make sure to assess how variable your participants are. If they differ significantly in expertise or in typical tasks, recruit at least five participants per user group.

Happy testing!

References

- Faulkner, L. (2003). Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behavior Research Methods, Instruments, and Computers, 35(3), 379-383.

- FDA (2011, June 22). Draft Guidance for Industry and Food and Drug Administration Staff – Applying Human Factors and Usability Engineering to Optimize Medical Device Design. Retrieved from http://www.fda.gov/.

- Medlock, M.C., Wixon, D., McGee, M., & Welsh, D. (2005). The rapid iterative test and evaluation method: Better products in less time. In R.G. Bias & D.J. Mayhew (Eds.), Cost-Justifying Usability: An Update for the Internet Age (pp. 489–517). Amsterdam, Netherlands: Elsevier.

- Nielsen, J. (2012, June 4). How many test users in a usability study? Retrieved from http://www.nngroup.com.

- Nielsen, J., & Landauer, T.K. (1993). A mathematical model of the finding of usability problems. In Proceedings of ACM INTERCHI’93 Conference (Amsterdam, The Netherlands, 24-29 April 1993), 206-213.

- Perfetti, C., & Landesman, L. (2001, June 18). Eight is not enough. Retrieved from http://uie.com.

- Sauro, J., & Lewis, J.R. (2012). Quantifying the user experience: Practical statistics for user research. Waltham, MA: Morgan Kaufmann.

- Schrag, J. (2006, June 15). Using formative usability testing as a fast UI design tool. Retrieved from http://dux.typepad.com.

- Spool, J., & Schroeder, W. (2001). Testing web sites: Five users is nowhere near enough. In CHI 2001 Extended Abstracts. New York: ACM Press, 285-286.

- Virzi, R.A. (1992). Redefining the test phase of usability evaluation: How many subjects is enough? Human Factors, 34, 457-468.

- Wiklund, M. (2007, October 1). Usability testing: Validating user interface design. Retrieved from http://mddionline.com.
